不满足于围棋,DeepMind人工智能又成游戏高手

|

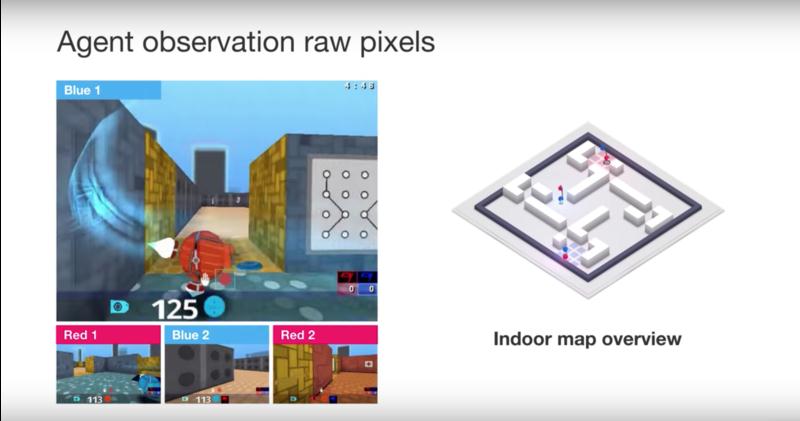

两年前,DeepMind创造的人工智能在围棋上打败了世界冠军,一举占据了新闻头条。如今这家Alphabet子公司的另一个程序又掌握了流行的多人电子游戏《雷神之锤》(Quake)的玩法。 DeepMind于上周二表示,他们开发的创新和强化学习技术,可以让人工智能系统在《雷神之锤3:竞技场》(Quake III Arena)夺旗战中的表现达到人类玩家的水平。 DeepMind表示,之所以让人工智能学习玩夺旗战,是将此当作一项练习。在这一游戏中,它们需要独立行动,并学会互相配合。DeepMind在博文中表示:“这是一项极其艰巨的难题,因为在它们不断合作的同时,地图也在不断发生变化。” 《雷神之锤3:竞技场》是一款第一人称射击游戏,规则很简单:两个团队要保护自己的旗帜,夺取对手的旗帜,但最后的结果可能很复杂。游戏要求玩家(按照人工智能领域的说法,叫智能体)与团队成员合作,在一系列不断变化的地图中与对手竞争。 DeepMind表示,智能体从未接受过关于游戏规则的指导,但它们却能以“非常高的水平”掌握游戏。在一场由人工智能玩家与40位人类玩家随机混合组队的锦标赛中,人工智能玩家迅速掌握了窍门,胜率超越了人类玩家。更可怕的是,人类玩家认为人工智能玩家在合作度上优于人类队友。 DeepMind在博客上写道:“实际上,智能体会学习类似人类的行为,例如跟随队友,并在对手的基地安营扎寨。总体来说,我们认为这项工作凸显了多智能体训练在促进人工智能发展上的潜力。”(财富中文网) 译者:严匡正 |

Two years ago, DeepMind drew headlines by creating an AI system that defeated the world champion of the game Go. Now another program at the Alphabet subsidiary has learned how to play the popular multiplayer video game Quake. DeepMind said last Tuesday that it had developed innovations and reinforcement learning that enabled an artificial-intelligence system to achieve human-level performance in Quake III Arena’s Capture the Flag, a 3-D first-person multiplayer game. DeepMind said that learning to play Capture the Flag was intended as an exercise in which several individual agents must act independently, while learning to interact incorporate with each other. “This is an immensely difficult problem — because with co-adapting agents the world is constantly changing,” DeepMind said in a blog post. Quake Arena III is a first-person shooter video game with simple rules — two teams protect their own flag while seizing that of their opponent — but also the potential for complex outcomes. The game requires players (or in AI parlance, agents) to cooperate with team members while competing with others amid a changing variety of maps. The agents were never instructed about the rules of the game, yet were able to learn the game “to a very high standard,” DeepMind said. In a tournament randomly mixing AI agents with 40 human players, the agents quickly learned to exceed the win rate of their flesh-and-blood counterparts. Even scarier, human players rated the agents as more collaborative teammates than other humans. “Agents in fact learn human-like behaviors, such as following teammates and camping in the opponent’s base,” DeepMind said on its blog. “In general, we think this work highlights the potential of multi-agent training to advance the development of artificial intelligence.” |