下次你在Zoom上打视频电话的时候,你可以让对方把手指放在鼻子旁边,或者让对方将侧脸对着镜头保持一分钟。

这些都是专家推荐的方法,以确保你的聊天对象是真人,而不是用深度伪造(Deepfake)技术生成的假形象。

这种防范措施虽然显得有些莫名其妙,但是我们本来就生活在一个奇怪的年代。

今年8月,加密货币交易所币安(Binance)的一名高管表示,曾经有骗子利用深度伪造技术假冒他的形象,用来对数个虚拟币项目实施电信诈骗。币安的沟通总监帕特里克·希尔曼称,曾经有诈骗分子在Zoom视频电话上假冒他的形象。(希尔曼并未提供相关证据证实他的说法,一些专家对此表示怀疑,但网络安全研究人员表示,这类事件是有可能发生的。)美国联邦调查局(FBI)在今年7月曾经警告道,有人可能会在网络求职遭遇时对方使用深度造假技术诈骗。一个月前,欧洲的几位市长表示,他们也被假冒的乌克兰总统弗拉基米尔·泽伦斯基骗了。更离谱的是,美国的一家名叫Metaphysic的初创公司开发了一款深度伪造软件,它在电视真人秀《美国达人秀》(America’s Got Talent)的决赛中,直接在观众面前,将几名歌手的脸无缝切换成了西蒙·考威尔等几位明星评委的脸,让所有人惊掉下巴。

深度伪造,是指利用使用人工智能技术,创建极具说服力的虚假图像和视频。以前要创假这样一个虚假形象,需要目标对象的大量照片,还需要很多时间和相当高超的编程和特效技术。即便假期脸被生成出来了,以前的AI模型的响应速度也不够快,无法实时生成视频直播级的完美假脸。

然而从币安和《美国达人秀》的例子能够看出,现在的情况已经不同了,人们在实时视频传输中使用深度伪造技术已经越来越容易了,而且此类软件现在也是唾手可得,很多还是免费的,用起来也没有什么技术门槛。这也为各种各样的电信诈骗甚至政治谣言提供了可能。

加州大学伯克利分校(University of California at Berkeley)的计算机科学家哈尼·法里德是视频分析和认证领域的专家。他感叹道:“我对现在实时深度伪技术造的速度和质量感到惊讶。”他表示,现在至少有三种不同的开源程序可以让人们制作实时深度造假视频。

法里德等专家都担心深度伪造技术会使电信诈骗发展到一个新高度。“这简直就像给网络钓鱼诈骗打了兴奋剂。”他说。

识别深度造假的小技巧

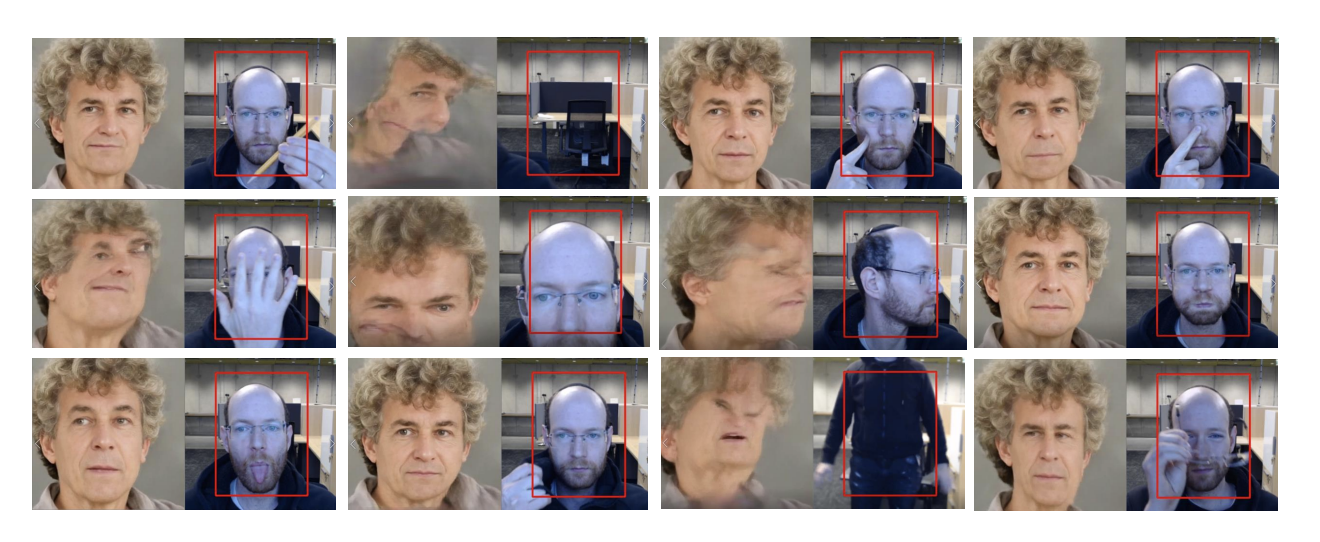

好在专家表示,目前还是有很多小技巧能够帮助你拆穿骗子的画皮。最可靠也最简单的方法,就是让对方侧过脸去,让镜头捕捉他的完整侧脸。深度伪造技术目前还无法保证侧面不露破绽,最主要的原因就是很难获取足够多的侧面照片来训练AI模型。虽然有一些方法可以通过正面图像推导出侧面形象,但这会大大增加生成图像过程的复杂性。

另外,深度伪造软件还利用了人脸上的“锚点”,来将深度伪造的“面具”匹配到人脸上。所以只需要让对方转头90度,就会导致一半的锚点不可见,这通常就会导致图像扭曲、模糊、变形,非常容易注意到。

位于以色列的本古里安大学(Ben-Gurion University)进攻性人工智能实验室(Offensive AI Lab)的负责人伊斯罗尔·米尔斯基还通过试验发现了很多能够检测出深度伪造的方法。比如在视频通话过程中要求人们随便拿一个东西在脸前划过,让某个东西在他面前反弹一下,让他们整理一下自己的衬衫,摸一下头发,或者用手遮挡半张脸。以上每一种办法,都会导致深度造假软件无法描绘多出来的物体,或者导致人脸严重失真。对于音频深度造假,米尔斯基建议你可以要求对方吹口哨,或者换一种口音说话,或者随机挑一首曲子让对方哼唱。

米尔斯基指出:“目前所有现有的深度伪造技术都采用了非常类似的协议。它们虽然接受了大量数据的训练,但其模式是非常特定的。多数软件只能模仿人的正脸,而处理不好侧面或者遮挡脸部的物体。”

法里德也展示了一种检测深度伪造的方法,那就是用一个简单的软件程序,让对方的电脑屏幕以某种模式闪烁,让电脑屏幕在对方脸上投射某种模式的光线。深度伪造技术要么无法将灯光效果展示在模拟图像中,要么反应速度太慢。法里德表示,只要让对方使用另一个光源——例如手机的手电筒,从另一角度照亮他们的脸,就可以达到类似的检测效果。

米尔斯基表示,要真实地模拟某个人做一些不寻常的事情,人工智能软件就需要看到几千个某人做这种事情的例子。但收集这么多的数据是很困难的。即便你成功训练AI软件完成了这些有挑战性的任务——比如拿起一根铅笔,从脸上划过,且不露破绽,那么只要你要求对方拿另一个东西代替铅笔(例如一个杯子),那么AI软件还是会失败。而且一般的诈骗分子也不太可能把假脸做到能够攻克“铅笔测试”和“侧脸测试”的地步。每个不同的任务都会增加AI模型训练的复杂性。“你希望深度伪造软件完善的方面是有限的。”米尔斯基说。

深度伪造技术也在日益进步

目前,很少有安全专家建议大家在视频通话前先验证身份——就像登陆很多网站要先填验证码一样。不过米尔斯基和法里德都认为,在一些重要场合,视频通话前先“验明正身”是有必要的,比如政治领导人之间的对话,或者有可能导致高额金额交易的对话。另外我们尤其要警惕一些反常的情形,例如陌生号码打来的电话,又或者人们的一些反常行为和要求。

法里德建议,对于一些非常重要的电话,你也可以使用简单的双因素认证,比如你能够同时给对方发条短信,问问他是不是正在跟你视频通话。

专家强调,深度伪造技术一直在进步,谁也不能保证将来它们会不会突破上面的检测手段,甚至是以上几种手段的组合。

正是考虑到了这一点,很多研究人员试图从另一角度解决深度伪造的问题——例如创建某种数字签名或者水印,来证明视频通话的真实性,而不是试图揭露深度伪造行为。

说到这里,就不得不提一个名叫“内容来源和真实性联合计划”(Coalition for Content Provenance and Authentication,简称C2PA)的机构,它是一个致力于建立数字媒体认证标准的基金会,该基金会得到了微软(Microsoft)、Adobe、索尼(Sony)和推特(Twitter)等公司的支持。法里德说:“我认为内容来源和真实性联合计划应该重视这个问题,他们已经为视频录制建立了规范,将它拓展到实时视频通话也是一件很自然的事情。”但法里德同时也承认,实视视频数据的验证并非一项简单的技术挑战。“我现在还不知道应该怎么做,但它是一个值得思考的问题。”

最后提醒大家,下次在Zoom软件上开电话会议的时候,记得带上一根铅笔。(财富中文网)

译者:朴成奎

The next time you get on a Zoom call, you might want to ask the person you’re speaking with to push their finger into the side of their nose. Or maybe turn in complete profile to the camera for a minute.

Those are just some of the methods experts have recommended as ways to provide assurance that you are seeing a real image of the person you are speaking to and not an impersonation created with deepfake technology.

It sounds like a strange precaution, but we live in strange times.

In August, a top executive of the cryptocurrency exchange Binance said that fraudsters had used a sophisticated deepfake “hologram” of him to scam several cryptocurrency projects. Patrick Hillmann, Binance’s chief communications officer, says criminals had used the deepfake to impersonate him on Zoom calls. (Hillmann has not provided evidence to support his claim, and some experts are skeptical that a deepfake was used. Nonetheless, security researchers say that such incidents are now plausible.) In July, the FBI warned that people could use deepfakes in job interviews conducted over video-conferencing software. A month earlier, several European mayors said they had initially been fooled by a deepfake video call purporting to be with Ukrainian President Volodymyr Zelensky. Meanwhile, Metaphysic, a startup that develops deepfake software, has made it to the finals of “America’s Got Talent” by creating remarkably good deepfakes of Simon Cowell and the other celebrity judges, transforming other singers into the celebrities in real time, right before the audience’s eyes.

Deepfakes are extremely convincing fake images and videos created through the use of artificial intelligence. It once required a lot of images of someone, a lot of time, and a fair degree of both coding skill and special-effects know-how to create a believable deepfake. And even once created, the A.I. model couldn’t run fast enough to produce a deepfake in real time on a live video transmission.

That’s no longer the case, as both the Binance story and Metaphysic’s “America’s Got Talent” act highlight. In fact, it’s becoming increasingly easy for people to use deepfake software to impersonate others in live video transmissions. Software that does this is now readily available, for free, and requires relatively little technical skill to use. And as the Binance story also shows, this opens the door to all kinds of fraud, and to political disinformation.

“I am surprised by how fast live deepfakes have come and how good they are,” Hany Farid, a computer scientist at the University of California at Berkeley who is an expert in video analysis and authentication, says. He says there are at least three different open source programs that allow people to create live deepfakes.

Farid is among those who worry that live deepfakes could supercharge fraud. “This is going to be like phishing scams on steroids,” he says.

The “pencil test” and other tricks to catch an A.I. impostor

Luckily, experts say there are still a number of techniques a person can use to give themselves a reasonable assurance that they are not communicating with a deepfake impersonation. One of the most reliable is simply to ask a person to turn so that the camera is capturing her in complete profile. Deepfakes struggle with profiles for a number of reasons. For most people, there aren’t enough profile images available to train a deepfake model to reliably reproduce the angle. And while there are ways to use computer software to estimate a profile view from a front-facing image, using this software adds complexity to the process of creating the deepfake.

Deepfake software also uses “anchor points” on a person’s face to properly position the deepfake “mask” on top of it. Turning 90 degrees eliminates half of the anchor points, which often results in the software warping, blurring, or distorting the profile image in strange ways that are very noticeable.
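To see why a 90-degree turn does so much damage, consider a deliberately crude geometric model. It is our assumption for illustration, not how any particular deepfake tool works: treat the head as a cylinder seen from above, spread the anchor points evenly across the front half of the face, and count a point as usable only while it still faces the camera. The helpers `anchor_azimuths` and `visible_fraction` are hypothetical, and the count of 68 points is just an illustrative number.

```python
import math

# Toy geometry (an assumption for illustration only): the head is a cylinder
# seen from above, and anchor points sit on its front half at azimuths between
# -90 and +90 degrees. A point faces the camera while cos(azimuth + yaw) > 0.


def anchor_azimuths(n: int) -> list[float]:
    """Spread n hypothetical anchor points evenly across the front of the face (degrees)."""
    step = 180.0 / n
    # Centre each point in its slot so none lies exactly on the +/-90 degree edge.
    return [-90.0 + step * (i + 0.5) for i in range(n)]


def visible_fraction(azimuths: list[float], yaw_deg: float) -> float:
    """Fraction of anchor points still facing the camera after turning the head by yaw_deg."""
    visible = sum(1 for a in azimuths if math.cos(math.radians(a + yaw_deg)) > 0)
    return visible / len(azimuths)


if __name__ == "__main__":
    points = anchor_azimuths(68)  # 68 is an illustrative count, nothing more
    for yaw in (0, 30, 60, 90):
        print(f"head turned {yaw:>2} degrees: {visible_fraction(points, yaw):.0%} of anchor points visible")
```

In this toy model every anchor point is usable head-on, and exactly half survive a full profile turn, which is the loss the experts describe.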

Yisroel Mirsky, a researcher who heads the Offensive AI Lab at Israel’s Ben-Gurion University, has experimented with a number of other methods for detecting live deepfakes that he has compared to the CAPTCHA system used by many websites to detect software bots (you know, the one that asks you to pick out all the images of traffic lights in a photo broken up into squares). His techniques include asking people on a video call to pick up a random object and move it across their face, to bounce an object, to lift up and fold their shirt, to stroke their hair, or to mask part of their face with their hand. In each case, either the deepfake will fail to depict the object being passed in front of the face or the method will cause serious distortion to the facial image. For audio deepfakes, Mirsky suggests asking the person to whistle, or to try to speak with an unusual accent, or to hum or sing a tune chosen at random.
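Like a CAPTCHA, these challenges work best when the impostor can’t predict which one is coming, so a caller might draw them at random instead of always asking for the same one. The sketch below is illustrative only: the prompt lists paraphrase Mirsky’s examples rather than a validated test battery, and `pick_challenges` is a hypothetical helper built on Python’s standard `secrets` module.

```python
import secrets

# Illustrative prompts paraphrasing the challenges described above; not a validated test battery.
VIDEO_CHALLENGES = [
    "Turn so the camera sees you in complete profile",
    "Pick up a nearby object and move it across your face",
    "Bounce an object in front of the camera",
    "Lift up and fold your shirt",
    "Stroke your hair",
    "Cover part of your face with your hand",
]
AUDIO_CHALLENGES = [
    "Whistle for a few seconds",
    "Say a sentence in an unusual accent",
    "Hum or sing a tune I pick right now",
]


def pick_challenges(k_video: int = 2, k_audio: int = 1) -> list[str]:
    """Draw distinct challenges from the OS random source so an attacker can't predict them."""
    rng = secrets.SystemRandom()
    return rng.sample(VIDEO_CHALLENGES, k_video) + rng.sample(AUDIO_CHALLENGES, k_audio)


if __name__ == "__main__":
    for prompt in pick_challenges():
        print("-", prompt)
```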

“All of today’s existing deepfake technologies follow a very similar protocol,” Mirsky says. “They are trained on lots and lots of data and that data has to have a particular pattern you are teaching the model.” Most A.I. software is taught only to mimic a person’s face reliably when seen from the front, and it can’t cope well with oblique angles or with objects that occlude the face.

Meanwhile, Farid has shown that another way to detect possible deepfakes is to use a simple software program that causes the other person’s computer screen to flicker in a certain pattern or causes it to project a light pattern onto the face of the person using the computer. Either the deepfake will fail to transfer the lighting effect to the impersonation or it will be too slow to do so. A similar detection might be possible just by asking someone to use another light source, such as a smartphone flashlight, to illuminate their face from a different angle, Farid says.

To realistically impersonate someone doing something unusual, Mirsky says, the A.I. software needs to have seen thousands of examples of people doing that thing. But collecting a data set like that is difficult. And even if you could train the A.I. to reliably impersonate someone doing one of these challenging tasks, such as picking up a pencil and passing it in front of their face, the deepfake is still likely to fail if you ask the person to use a very different kind of object, like a mug. Attackers using deepfakes are also unlikely to have trained a deepfake to overcome multiple challenges, such as both the pencil test and the profile test. Each different task, Mirsky says, increases the complexity of the training the A.I. requires. “You are limited in the aspects you want the deepfake software to perfect,” he says.
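Mirsky’s point about stacking challenges can be made concrete with a simple counting model, under assumptions that are ours rather than his: suppose there are n possible challenges, the attacker has trained the deepfake to handle m of them, and you ask k distinct challenges chosen uniformly at random. If the deepfake passes only the challenges it was trained for, it survives with probability C(m, k) / C(n, k). The helper `chance_all_covered` is hypothetical.

```python
from fractions import Fraction
from math import comb


def chance_all_covered(n_challenges: int, n_trained: int, k_asked: int) -> Fraction:
    """Probability that k distinct challenges, drawn uniformly at random from
    n_challenges, all fall among the n_trained the attacker prepared for.
    (Toy model: a deepfake passes a challenge only if it was trained on it.)"""
    if not (0 <= n_trained <= n_challenges and 0 <= k_asked <= n_challenges):
        raise ValueError("need 0 <= n_trained, k_asked <= n_challenges")
    # comb(m, k) is 0 when k > m, so asking more challenges than the attacker
    # prepared for gives probability 0 in this model.
    return Fraction(comb(n_trained, k_asked), comb(n_challenges, k_asked))


if __name__ == "__main__":
    for k in (1, 2, 3):
        print(k, "challenge(s):", chance_all_covered(9, 2, k))
```

With an arbitrary pool of nine challenges and a deepfake prepared for two, one random challenge is passed 2/9 of the time, two are passed 1/36 of the time, and three are never passed.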

Deepfakes are getting better all the time

For now, few security experts are suggesting that people will need to use these CAPTCHA-like challenges for every Zoom meeting they take. But Mirsky and Farid both said that people might be wise to use them in high-stakes situations, such as a call between political leaders, or a meeting that might result in a high-value financial transaction. And both Farid and Mirsky urged people to be attuned to other possible red flags, such as audio calls from unfamiliar numbers or people behaving strangely or making unusual requests.
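The experts’ rule of thumb, reserving these checks for high-stakes calls or calls that raise red flags, could be written down as a simple checklist. The field names in `CallContext` and the function `reasons_to_verify` are hypothetical; the conditions themselves are the ones Mirsky and Farid list.

```python
from dataclasses import dataclass


@dataclass
class CallContext:
    # Hypothetical fields; each mirrors a situation the experts single out.
    between_political_leaders: bool = False
    may_lead_to_large_transaction: bool = False
    from_unfamiliar_number: bool = False
    unusual_behavior_or_request: bool = False


def reasons_to_verify(call: CallContext) -> list[str]:
    """List why this call deserves liveness challenges or an out-of-band check (empty = none)."""
    checks = [
        (call.between_political_leaders, "high stakes: call between political leaders"),
        (call.may_lead_to_large_transaction, "high stakes: may lead to a high-value transaction"),
        (call.from_unfamiliar_number, "red flag: unfamiliar number"),
        (call.unusual_behavior_or_request, "red flag: strange behavior or unusual request"),
    ]
    return [reason for flagged, reason in checks if flagged]


if __name__ == "__main__":
    call = CallContext(from_unfamiliar_number=True, may_lead_to_large_transaction=True)
    for reason in reasons_to_verify(call):
        print("-", reason)
```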

Farid says that for very important calls, people might use some kind of simple two-factor authentication, such as sending a text message to a mobile number you know to be the correct one for that person, asking whether they are on a video call with you right now.
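One way to tighten this text-message check, which is our extension rather than Farid’s recommendation, is to include a one-time code in the text and ask the person to read it back on the video call. The essential part is still Farid’s: send it to a number you already know belongs to the person, never to one supplied during the call. `send_text` is a hypothetical placeholder for whatever messaging service you use; here it only prints.

```python
import hmac
import secrets


def send_text(number: str, message: str) -> None:
    """Hypothetical placeholder for an SMS service; this sketch only prints."""
    print(f"[text to {number}] {message}")


def start_out_of_band_check(known_number: str) -> str:
    """Text a fresh six-digit code to a number you already trust for this person."""
    code = f"{secrets.randbelow(10**6):06d}"
    send_text(known_number, f"Are you on a video call with me right now? If so, read me this code: {code}")
    return code


def confirm(expected_code: str, code_read_back: str) -> bool:
    """Compare the code the caller reads back on the video call (constant-time compare)."""
    return hmac.compare_digest(expected_code.encode(), code_read_back.strip().encode())


if __name__ == "__main__":
    code = start_out_of_band_check("+1-555-0100")  # placeholder number
    print(confirm(code, code))      # the real person received the text
    print(confirm(code, "123456"))  # an impostor guessing (wrong unless extremely lucky)
```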

The researchers also emphasized that deepfakes are getting better all the time and that there is no guarantee that it won’t become much easier for them to evade any particular challenge—or even combinations of them—in the future.

That’s also why many researchers are trying to address the problem of live deepfakes from the opposite perspective—creating some sort of digital signature or watermark that would prove that a video call is authentic, rather than trying to uncover a deepfake.
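The signature-or-watermark approach can be sketched as tagging each chunk of a video stream as it is sent, so the receiver can check that nothing was altered or reordered in transit. This is a simplified sketch under stated assumptions: it uses HMAC-SHA256 from Python’s standard library, which is a shared-key message authentication code rather than a public-key digital signature, and the functions `tag_chunks` and `verify_chunks` are hypothetical. A tag also proves only which key holder produced the stream; vouching that a real camera captured a real face would presumably require the signing to happen on the capture device itself.

```python
import hashlib
import hmac
import secrets

# Sketch only: HMAC-SHA256 is a shared-key message authentication code, not a
# public-key digital signature, and it proves who holds the key, not that the
# pictured face is real.

GENESIS = bytes(32)  # fixed starting value for the chain


def tag_chunks(key: bytes, chunks: list[bytes]) -> list[bytes]:
    """Sender: tag each chunk, folding in its index and the previous tag, so that
    altered, reordered, or removed-from-the-middle chunks break verification.
    (Truncating the end of the stream is not detected by this sketch.)"""
    tags, prev = [], GENESIS
    for i, chunk in enumerate(chunks):
        msg = prev + i.to_bytes(8, "big") + hashlib.sha256(chunk).digest()
        prev = hmac.new(key, msg, hashlib.sha256).digest()
        tags.append(prev)
    return tags


def verify_chunks(key: bytes, chunks: list[bytes], tags: list[bytes]) -> bool:
    """Receiver: recompute the chain and compare each tag in constant time."""
    if len(chunks) != len(tags):
        return False
    expected = tag_chunks(key, chunks)
    return all(hmac.compare_digest(e, t) for e, t in zip(expected, tags))


if __name__ == "__main__":
    key = secrets.token_bytes(32)  # assumed to be shared in advance
    stream = [b"frame-0", b"frame-1", b"frame-2"]
    tags = tag_chunks(key, stream)
    print(verify_chunks(key, stream, tags))                                   # untouched stream
    print(verify_chunks(key, [stream[0], b"swapped face", stream[2]], tags))  # substituted chunk
```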

One group that might work on a protocol for verifying live video calls is the Coalition for Content Provenance and Authenticity (C2PA), a foundation dedicated to digital media authentication standards that’s backed by companies including Microsoft, Adobe, Sony, and Twitter. “I think the C2PA should pick this up because they have built a specification for recorded video and extending it for live video is a natural thing,” Farid says. But Farid admits that trying to authenticate data that is being streamed in real time is not an easy technological challenge. “I don’t see immediately how to do it, but it will be interesting to think about,” he says.

In the meantime, remind the guests on your next Zoom call to bring a pencil to the meeting.