杰弗里·辛顿是科技界的开拓者,驱动ChatGPT等工具的人工智能的许多重要发展,都有他的功劳。目前,ChatGPT拥有数以百万计的用户。但这位75岁的开拓者表示,他后悔将毕生精力投入到这个领域,因为人工智能可能被滥用。

在《纽约时报》(New York Times)于5月1日发表的采访报道中,辛顿说:“我们很难找到阻止不法分子利用人工智能作恶的方法。我只能用一个标准的借口安慰自己:研究人工智能,如果我不做,也会有其他人去做。”

辛顿经常被称为“人工智能教父”,他从事过多年学术研究,2013年,谷歌(Google)以4,400万美元收购他的公司后,他加入谷歌。他对《纽约时报》表示,关于如何部署人工智能技术这个问题,谷歌一直是“适当的管理者”,而且该科技巨头始终以负责任的方式开发这项技术。但他在5月从谷歌离职,因此他可以自由谈论“人工智能的威胁”。

辛顿表示,他担心的主要问题之一是,人们很轻易就能够使用人工智能文本和图像生成工具,这可能导致更多虚假或欺诈性内容出现,而普通人“再也无法辨别真伪”。

对人工智能被不当利用的担忧已经成为现实。几周前,网络上开始流传教宗方济各身穿白色羽绒夹克的虚假图片。4月下旬,美国共和党全国委员会(Republican National Committee)发布了美国总统乔·拜登连任后银行倒闭的深度造假图片。

在OpenAI、谷歌和微软(Microsoft)等公司纷纷升级人工智能产品的同时,最近几个月有越来越多人呼吁放慢研发新技术的速度,并对快速扩张的人工智能领域实施监管。今年3月,包括苹果(Apple)的联合创始人史蒂夫·沃兹尼亚克和计算机科学家约书亚·本希奥等在内的多位科技界大佬发表公开信,要求禁止开发高级人工智能系统。辛顿并未签署公开信,但他认为公司在进一步扩大人工智能技术的规模之前应该慎重。

他说:“我认为,在确定能否控制人工智能之前,不应该继续扩大其规模。”

辛顿还担心,人工智能技术可能改变就业市场,导致非技术性岗位被边缘化。他警告道,人工智能有能力威胁更多类型的工作。

辛顿称:“它能够从事繁重枯燥的工作。可能还会有更多岗位被人工智能取代。”

对于辛顿的采访内容,谷歌强调公司承诺坚持“负责任的方式”。

谷歌的首席科学家杰夫·迪恩在一份声明中告诉《财富》杂志:“杰弗里·辛顿在人工智能领域取得了根本性的突破,我们感谢他十多年来为谷歌公司所做的贡献。作为最早发布人工智能准则的公司之一,我们依旧致力于以负责任的方式应用人工智能。我们将继续大胆创新,同时学习了解新出现的风险。”

辛顿并未立即答复《财富》杂志的置评请求。

人工智能的“关键时刻”

辛顿1972年从爱丁堡大学(University of Edinburgh)研究生毕业,开始了自己的职业生涯。他在爱丁堡大学开始从事神经网络研究。神经网络是大致模拟人脑工作原理的数学模型,可以分析海量数据。

辛顿的神经网络研究是他和两位学生创立的公司DNNresearch提出的突破性理念。这家公司于2013年被谷歌收购。辛顿和两位同事(其中一位是本希奥)凭借神经网络研究荣获2018年图灵奖[Turing Award,图灵奖相当于计算机界的诺贝尔奖(Nobel Prize)]。他们的研究成果对OpenAI的ChatGPT和谷歌的Bard聊天机器人等技术的诞生至关重要。

作为人工智能领域的重要思想家之一,辛顿认为目前是“关键”时刻,并且充满了机遇。辛顿在今年3月接受美国哥伦比亚广播公司(CBS)采访时指出,他认为人工智能创新的速度将超过我们控制它的速度,这令他深感忧虑。

在采访中,他对《早晨秀》(CBS Mornings)节目表示:“这件事情很棘手。你不希望由规模庞大的营利性公司决定什么是真实的。直到最近,我一直以为我们可能要在20年到50年之后,才能研发出通用人工智能。现在,我认为这个期限将缩短到20年甚至更短。”

辛顿还认为,我们可能很快就会看到计算机能够产生自我完善的想法。“真是个难题,对吧?我们必须认真思考如何控制这种变化。”

辛顿表示,在培训和呈现人工智能驱动产品方面,谷歌比微软更慎重,并且谷歌会提醒用户聊天机器人分享的信息。谷歌一直以来都是人工智能研究的引领者,直至最近生成式人工智能开始兴起。众所周知,谷歌母公司Alphabet的首席执行官桑达尔·皮查伊曾经将人工智能与塑造了人类的其他创新相提并论。

皮查伊在4月播出的一段采访中称:“我始终认为人工智能是人类正在研究的影响最深远的技术,它的意义将超过人类历史上掌握的火、电或其他技术。”虽然火有危险,但人类依旧学会了如何熟练地驾驭它,同样,皮查伊认为人类也可以驾驭人工智能。

他说:“关键在于什么是智能,什么是人类。我们正在开发的技术,未来将拥有人类前所未见的强大能力。”(财富中文网)

译者:刘进龙

审校:汪皓

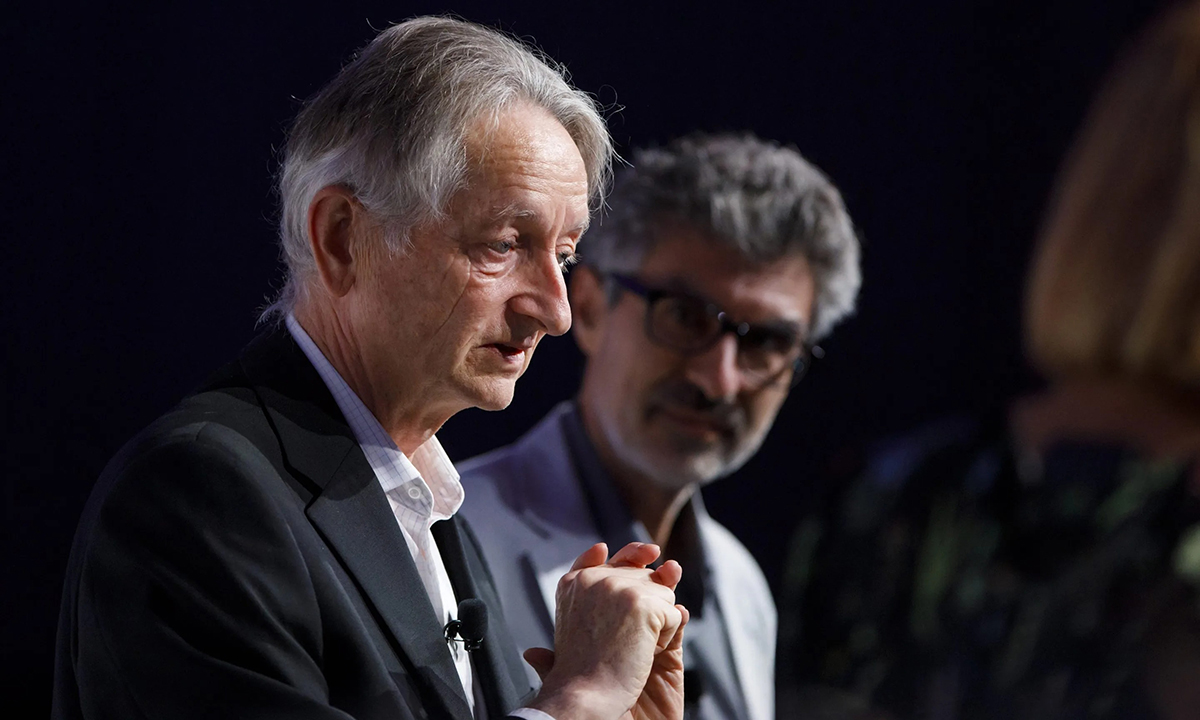

Geoffrey Hinton is the tech pioneer behind some of the key developments in artificial intelligence powering tools like ChatGPT that millions of people are using today. But the 75-year-old trailblazer says he regrets the work he has devoted his life to because of how A.I. could be misused.

“It is hard to see how you can prevent the bad actors from using it for bad things,” Hinton told the New York Times in an interview published on May 1. “I console myself with the normal excuse: If I hadn’t done it, somebody else would have.”

Hinton, often referred to as “the Godfather of A.I.,” spent years in academia before joining Google in 2013 when it bought his company for $44 million. He told the Times that Google has been a “proper steward” for how A.I. tech should be deployed and that the tech giant has acted responsibly for its part. But he left the company in May so that he could speak freely about “the dangers of A.I.”

According to Hinton, one of his main concerns is how easy access to A.I. text- and image-generation tools could lead to more fake or fraudulent content being created, and how the average person would “not be able to know what is true anymore.”

Concerns surrounding the improper use of A.I. have already become a reality. Fake images of Pope Francis in a white puffer jacket made the rounds online a few weeks ago, and deepfake visuals showing banks failing under President Joe Biden if he is reelected were published by the Republican National Committee in late April.

As companies like OpenAI, Google, and Microsoft work on upgrading their A.I. products, there are also growing calls for slowing the pace of new developments and regulating a space that has expanded rapidly in recent months. In March, some of the top names in the tech industry, including Apple cofounder Steve Wozniak and computer scientist Yoshua Bengio, signed a letter asking for a ban on the development of advanced A.I. systems. Hinton didn’t sign the letter, although he believes that companies should think before scaling A.I. technology further.

“I don’t think they should scale this up more until they have understood whether they can control it,” he said.

Hinton is also worried about how A.I. could change the job market by rendering nontechnical jobs irrelevant. He warned that A.I. had the capability to harm more types of roles as well.

“It takes away the drudge work,” Hinton said. “It might take away more than that.”

When asked for a comment about Hinton’s interview, Google emphasized the company’s commitment to a “responsible approach.”

“Geoff has made foundational breakthroughs in A.I., and we appreciate his decade of contributions at Google,” Jeff Dean, the company’s chief scientist, told Fortune in a statement. “As one of the first companies to publish A.I. principles, we remain committed to a responsible approach to A.I. We’re continually learning to understand emerging risks while also innovating boldly.”

Hinton did not immediately return Fortune’s request for comment.

A.I.’s “pivotal moment”

Hinton began his career as a graduate student at the University of Edinburgh in 1972. That’s where he first started his work on neural networks, mathematical models that roughly mimic the workings of the human brain and are capable of analyzing vast amounts of data.

His neural network research was the breakthrough concept behind DNNresearch, a company he built with two of his students, which Google ultimately bought in 2013. Hinton won the 2018 Turing Award, the equivalent of a Nobel Prize in the computing world, with two colleagues (one of whom was Bengio) for their neural network research, which has been key to the creation of technologies including OpenAI’s ChatGPT and Google’s Bard chatbot.

As one of the key thinkers in A.I., Hinton sees the current moment as “pivotal” and ripe with opportunity. In an interview with CBS in March, Hinton said he believes that A.I. innovations are outpacing our ability to control them, and that’s a cause for concern.

“It’s very tricky things. You don’t want some big for-profit companies to decide what is true,” he told CBS Mornings. “Until quite recently, I thought it was going to be like 20 to 50 years before we have general purpose A.I. And now I think it may be 20 years or less.”

Hinton added that we could be close to computers being able to come up with ideas to improve themselves. “That’s an issue, right? We have to think hard about how you control that.”

Hinton said that Google is going to be a lot more careful than Microsoft when it comes to training and presenting A.I.-powered products and cautioning users about the information shared by chatbots. Google has been at the helm of A.I. research for a long time—well before the recent generative A.I. wave caught on. Sundar Pichai, CEO of Google parent Alphabet, has famously likened A.I. to other innovations that have shaped humankind.

“I’ve always thought of A.I. as the most profound technology humanity is working on—more profound than fire or electricity or anything that we’ve done in the past,” Pichai said in an interview aired in April. Just like humans learned to skillfully harness fire despite its dangers, Pichai thinks humans can do the same with A.I.

“It gets to the essence of what intelligence is, what humanity is,” Pichai said. “We are developing technology which, for sure, one day will be far more capable than anything we’ve ever seen before.”